Mission-critical reporting feature secures renewal of $10m ARR.

Agile product development of a new reporting feature within a global, cross-functional team.

*Product designer

*Sep 2022 - Feb 2023

Role: Product designer, reporting to Head of Design.

Responsibilities: Discovery, definition, design, testing, delivery

[Research, user testing, user journey mapping, wireframing, mockups, writing requirements, high-fidelity prototyping, final design delivery]

Team structure: Design; Product Management; Engineering; Stakeholders (Customer Success, Data Analysts, Executive Suite).

Duration: September 2022 - Feb 2023 (6 months)

Project environment

Education platform proving its value to customers through accessible data.

Secure Code Warrior (SCW) is a B2B SaaS cybersecurity education company. Customers purchase SCW to improve their developers’ secure coding skills. They then validate this expenditure internally by monitoring the learning progression of their developers.

Customers were complaining that there was no self-service way to track their developers’ learning progress. Consequently, customers representing $10m of revenue were threatening to churn.

My team was tasked with making metrics consistent and data easily accessible to our customers in order to enable their operations and prove the value of their investments of time and money in Secure Code Warrior.

Context

Exporting Course results (only available as raw CSV files)

Exporting Assessments results (only available as raw CSV files)

Team leaderboard results (only available as raw CSV files)

A fragmented, inaccurate, and overwhelming reporting experience was frustrating customers.

SCW offers a plethora of educational content - from personal education to competitive learning tournaments. But data was siloed and only accessible as raw CSV exports. Customers were spending an inordinate amount of time and money parsing raw data into usable information.

Due to customer complaints about difficulty in accessing data, SCW customer success admins were spending 10+ hours per week building dashboards and reports for their clients. A Google Slides deck connected to SCW’s API had been created to simplify this process, but once it was delivered, customers complained that SCW data often did not match their internal metrics, prompting serious discussion about data discrepancies.

Problem

Understanding the problem with current “developer status” definitions.

User personas and redefining reporting at SCW.

SCW defined developers’ status on activities as invited, enrolled, or completed. But “invited” had 3 different definitions, making it impossible to track accurately.

Through surveys, internal stakeholder conversations, and customer interviews, we defined the key user persona as Company Admins, whose core goals were check-boxing and internal benchmarking.

This knowledge empowered us to fundamentally shift the understanding of reporting at SCW from an arbitrary definition of “invited” to a binary of “completed” vs “not completed”.

This change was a major breakthrough because, once implemented, it solved our data discrepancy issues and delivered more value to our customers.

Discovery

Redefining engagement to a binary of completed/not completed.

Using data to roadmap deliverables.

The PM and I combined this new understanding with quantitative analysis of customer feedback in Productboard to roadmap the MVP and future deliverables.

We then held design workshops and SME interviews with internal stakeholders, from customer success to C-suite executives, to define 3 key goals the reporting project should achieve:

A central repository with a single database - customers must have easy access to data in a single location.

Accurate data analysis - customers can interpret the data easily and use it to inform their internal decisions.

Future-proofed user experience - current at-risk customers must renew and new customers must not churn.

Process

Data collation from surveys and Productboard feedback

105 companies included

252+ feedback items.

Top 40 companies by revenue (71 feedback items).

All companies with tenure >13 months (17 feedback items).

From scattered and flawed, to consolidated and accurate

After rigorous user interviews, usability testing, and iteration, we released a high-fidelity MVP of the Engagement report to our 600+ customers.

The outcome was a single, easy-to-access location for all Engagement reports, built on our new binary definitions to eliminate data discrepancies. A user could search for specific activities, teams, or dates, and see who had completed or not completed their assigned content.

Future releases would add other types of reports on a schedule (e.g. Learning activities, CWE numbers, etc.).

Solution

Quickly search for relevant content.

A simple selection of content, team, and time gave users powerful control over the data, enabling them to quickly search for specific activities.

This enabled them to identify courses with low completion rates and pinpoint the developers who had yet to finish those activities.

Check boxing simplified

The ability to locate individual developers and see all of their completed and not completed content was seen as extremely valuable and a major time saver.

Company admins reported being able to complete in 30 minutes a single task that had previously taken them a week.

Generate reports for every activity.

Providing users with a simple, consistent interface pattern created familiarity, giving them the confidence to explore the data. This in turn encouraged secondary stakeholders such as executives to interact with the feature, enabling a new level of asynchronous reporting.

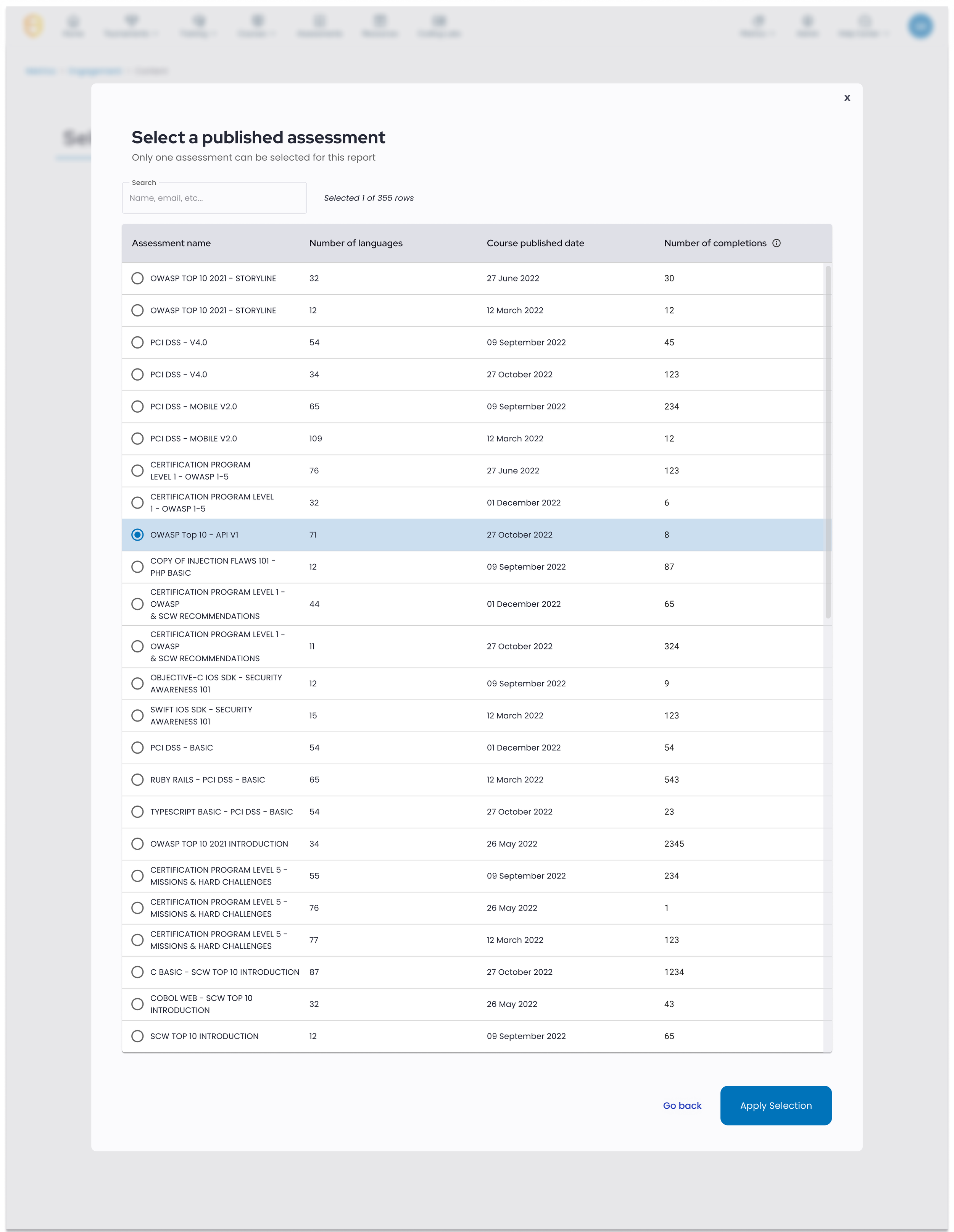

Selecting an engagement report type.

Selecting a specific activity to report.

MVP prototype

Customers worth $10m ARR who were previously threatening to churn all renewed their licences.

Company admins - core users - reported time savings of 7 hours per report.

SCW CSMs reclaimed 10 hours per week by no longer needing to build reports for customers.